Over the history of digital health apps, we have seen a change in what a “large deployment” looks like. Over the past decade, a “large deployment” has grown from hundreds of users to many thousands of mobile app users. While we have kept pace with this growth through incremental improvements over the past few years, we have also been planning a larger overhaul of CHT infrastructure to meet the needs of robust national-scale deployments. With that, I’m excited to share a post on testing proofs of concept for scaling the CHT, written by @Nick with contributions from @gareth, @henok, and @mrjones.

It would be great to discuss further with all of you, so please post thoughts and questions below!

Testing Scalability of Proofs of Concept

Many apps built using the Community Health Toolkit (CHT) have several hundred to several thousand users. Over time, Medic and the CHT community noticed a bottleneck when implementing partners add or train many new users at once, such as community health workers, supervisors, and health administrators. One solution to this delay is to increase server resources (scale vertically) to handle the load. However, this server-based solution only works up to a point, after which adding resources such as memory and processing power no longer helps.

We hypothesized that we could horizontally scale the API and the database (CouchDB) to solve our problem with a large number of concurrent replications. This means we would start up new machines that run only the API or only the database code. That way, we could spread the workload across many smaller servers, which would reduce the bottleneck in processing requests.
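To make the idea of spreading work across servers concrete, here is a minimal sketch of round-robin distribution. It is an illustration only: the backend names are hypothetical, and round-robin is an assumed policy, not necessarily how requests are balanced in the CHT.

```python
from collections import Counter
from itertools import cycle


def distribute(requests, backends):
    """Assign each request to a backend in round-robin order."""
    rotation = cycle(backends)
    return {request: next(rotation) for request in requests}


# Hypothetical names, for illustration only.
backends = ["api-1", "api-2"]
requests = [f"replication-{i}" for i in range(10)]

assignment = distribute(requests, backends)
load = Counter(assignment.values())

# Each backend ends up with half of the replication requests.
for backend, count in sorted(load.items()):
    print(f"{backend}: {count} requests")
```

With two backends, each one handles half of the concurrent replications, which is the effect horizontal scaling aims for.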

In this post, we cover how our team tested the hypothesis, what the results were, and what we will be doing next to resolve any potential bottlenecks for large deployments of CHT apps.

Architecture

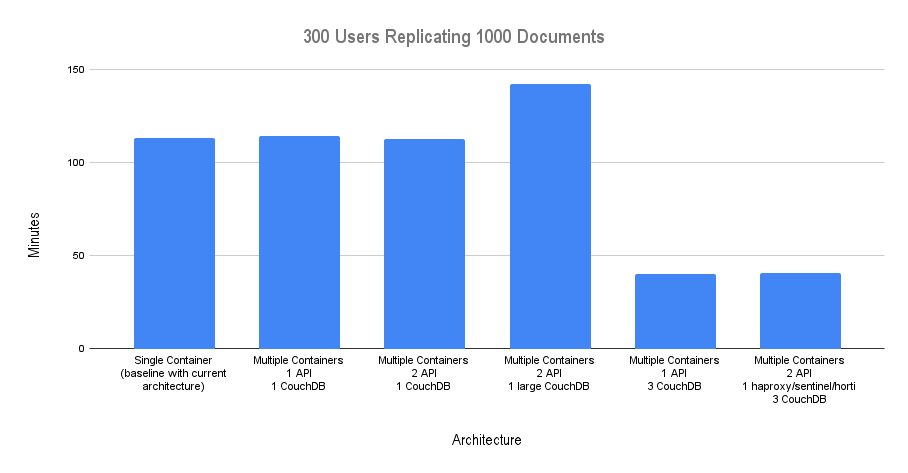

We set up a few different environments to test scalability, specifically how well the CHT can handle many users syncing data at the same time. We started with our current architecture, and from there we split all of our services into individual containers. Each container is a standard unit of software that packages up code and all its dependencies so the application runs quickly and reliably from one computing environment to another. After that, we scaled the API server so that two instances ran in separate containers. From there, we set up an environment where CouchDB, our database, was scaled to have two instances of CouchDB running on separate containers. Finally, we scaled both our API and CouchDB instances. We created the following environment combinations, listed with their Amazon Web Services (AWS) instance sizes:

- Single Container: Current Architecture on a single instance (r4.2xlarge)

- Multiple Containers: Individual containers for each service on a single instance

- Multiple Containers: 2 API (r4.xlarge) and 1 CouchDB

- Multiple Containers: 2 API (r4.xlarge) and vertically scaled CouchDB

- Multiple Containers: 1 API (r4.xlarge) and 3 CouchDB

- Multiple Containers: 2 API (r4.xlarge), 1 container with haproxy, sentinel, horti, 3 CouchDB

Testing Scalability

Our testing suite simulates users replicating for the first time, as this workflow puts the infrastructure under the most load. The simulation runs as a Node.js script that uses PouchDB, the same software that handles replication between our client software and server. In most cases, we ramped up from 10 to 300 virtual users. Each virtual user simulated a real user, like a Community Health Worker, with 1,000 database documents to replicate.
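The shape of such a ramp test can be sketched as follows. This is not the actual suite, which is a Node.js script using PouchDB. It is a simplified Python stand-in where `simulate_replication` sleeps instead of doing real network and database work, so it shows the structure of the test but not server contention. The function names, the intermediate user counts, and the per-document delay are all assumptions.

```python
import asyncio
import time
from statistics import mean

DOCS_PER_USER = 1000  # from the post: each virtual user replicates 1,000 documents


async def simulate_replication(docs: int, per_doc_delay: float) -> float:
    """Stand-in for one user's initial replication; returns elapsed seconds."""
    start = time.perf_counter()
    # Hypothetical cost model: sleep instead of real replication work.
    await asyncio.sleep(docs * per_doc_delay)
    return time.perf_counter() - start


async def run_step(user_count: int, per_doc_delay: float = 1e-6) -> float:
    """Run user_count replications concurrently and return the average duration."""
    durations = await asyncio.gather(
        *(simulate_replication(DOCS_PER_USER, per_doc_delay) for _ in range(user_count))
    )
    return mean(durations)


async def ramp(user_counts):
    """Run one step per user count, in order, and collect the average durations."""
    results = {}
    for count in user_counts:
        results[count] = await run_step(count)
    return results


if __name__ == "__main__":
    # 10 and 300 come from the post; the steps in between are assumed.
    for users, avg in asyncio.run(ramp([10, 50, 100, 300])).items():
        print(f"{users} users: average {avg:.4f}s")
```

In a real test, the stand-in would be replaced by an actual replication against the server, and the average per step would be compared across architectures.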

Findings

Please note that this is a synthetic stress test designed for worst-case scenarios. The CHT has been successfully deployed to support thousands of users (CHWs) and can handle millions of database documents.

In this chart we show the comparative benefits of the different architectures:

The results of the tests were largely as expected: the biggest impact came from scaling CouchDB horizontally. Adding one more CouchDB node to the cluster reduced average replication time by 75%.
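For readers who want to see what a 75% reduction means in practice, here is the arithmetic as a short sketch. The timings are hypothetical and chosen only to illustrate the calculation. They are not measurements from our tests.

```python
def percent_reduction(before: float, after: float) -> float:
    """Percentage by which `after` is lower than `before`."""
    if before <= 0:
        raise ValueError("before must be positive")
    return (before - after) / before * 100


# Hypothetical average replication times in seconds (not measured values).
baseline_seconds = 120.0
scaled_seconds = 30.0

reduction = percent_reduction(baseline_seconds, scaled_seconds)
speedup = baseline_seconds / scaled_seconds

print(f"Reduction: {reduction}%")  # Reduction: 75.0%
print(f"Speedup: {speedup}x")      # Speedup: 4.0x
```

In other words, a 75% reduction in average replication time means replications finish in a quarter of the original time.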

What’s Next

Based on these findings, we have prioritized horizontal scalability of CouchDB. We are also splitting our Medic-OS containerization into individual containers based on the process each one runs (e.g. API, CouchDB, Sentinel). These changes are being developed and released with CHT Core 4.0. You can read more and follow along in our kickoff post.