We’d like to do some analysis to see which report fields are being edited most often.

I recognize that for each doc there is a _rev. From what I’ve seen, every time the doc is edited, the _rev property will automatically be updated. Given the example below, the prefix will be incremented (1- will become 2-) and a new guid will be generated (f4a8f.... will be replaced with a new one).

{

"_id": "220eaa15-f8bb-498d-81a6-652de8576b00",

"_rev": "1-f4a8f01dca898099f3b813ae8928215e",

...

}

Based on this thread, I understand that it is possible to see previous revisions (using curl) but not from Fauxton, and even though you might be able to see them through curl, once compaction runs those previous revisions will be deleted anyway.

Is my understanding correct that there’s no reliable way to see previous versions of edited couchdb docs?

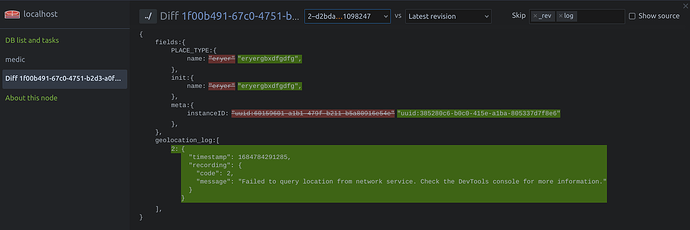

FWIW, couch-photon has a really nice and simple view for diffing specific revisions of a doc:

Unfortunately, this is not super useful in prod (unless Photon is installed on the prod CouchDB instance…). Also, you will still only be able to see revisions that have not been compacted yet…

I think that any longitudinal analysis of field changes would need to be done against Postgres data. However, I am not sure that cht-sync/couch2pg support storing historical records (instead of just the latest version of each doc).

2 Likes

Got it, thanks @jkuester!

A couple more questions:

Practically speaking, what kind of timescale are we seeing compaction run in the real world?

I recognize this is probably highly variable, but just curious if compaction is something that we’d expect to see happening hourly, daily, weekly, bi-weekly, monthly, etc… (i’m assumings it’s more like weekly or monthly than hourly/daily).

Does PouchDB work the same way?

If a CHW creates and then edits a doc multiple times before ever syncing (and then syncs), are all versions synced to the CouchDB or just the the latest one?

couch2pg

Yeah, when couch2pg receives an update to a doc it will delete the existing doc in PostgreSQL (based on _id) and insert the new version… though I imagine (at least for a PoC) we could modify it to not delete anything and create the (unique) index on _id AND _rev instead of just _id.

what kind of timescale are we seeing compaction run in the real world?

Unfortunately, the algorithm Couch uses to automatically trigger compaction (even the simpler algorithm that applies to our 2.x instances) is going to be highly dependent on the characteristics of a particular deployment. It takes things like db size and fragmentation into account, so things could vary depending on how active the instance is and what the ratio is of new vs updated documents. Additionally, compaction runs at the shard level, so different shards in a DB might contain more or less revisions. Probably the only way to get reliable data about this is to run some queries against a couple of production instances. I can say from local testing that with no compaction, Couch will by default store 1000 revisions of a document.

Couch does report in the logs whenever it runs compaction. I had a quick glance at the logs from a large production instance and can just add this anecdote. In 3 days of logs, compaction ran 18 times on the medic-logs db and only 1 time on the medic db.

Does PouchDB work the same way?

For offline users, their local PouchDB runs with auto_compaction enabled. This basically means that no previous revisions are stored locally. So, when syncing back to Couch, I believe that only the latest revision will be sent.

1 Like