What would you advise, client side or server side purging, given our current challenges with document limits?

Sorry, I think I made this more confusing by saying “server-side”. Newer versions of the CHT support only one kind of purging (described on the docs page). It has both server-side and client-side components, but they work together from the same configuration, so there is no choice between one and the other.

Would appreciate guidance on writing purge rules so that we don’t lose any data, our greatest worry

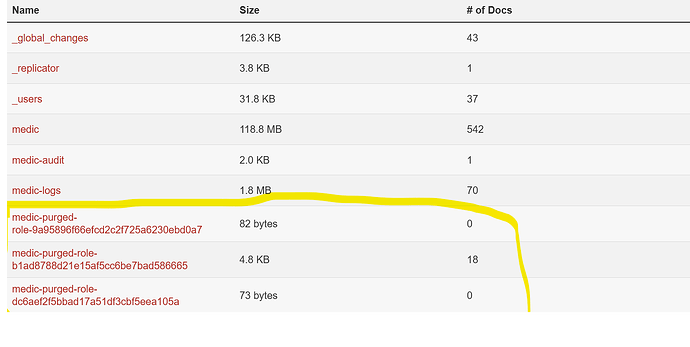

The most important thing to understand about CHT purging is that the documents are removed from the client devices, but they are not deleted from the server. Technically speaking, the medic database on the server is not affected at all by the purging process. Instead, the purging process will separately maintain a list of docs that should be considered “purged” for specific users. These docs will be removed from the client devices for those specific users, but will remain untouched in the medic database on the server. In this way, it is not possible for the purging process to result in data loss (in the sense that the data is gone from the server).

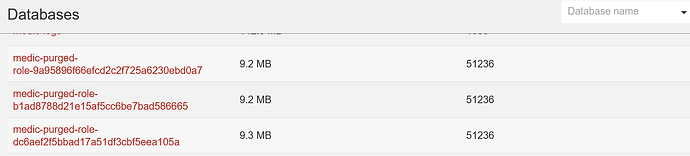

Purged docs will be removed from affected client devices (or not replicated to them in the first place). So, if you have a CHW user that currently has access to 11317 documents and 11000 of those docs get purged, then the user will only sync 317 docs when logging into their device. Of course, that means the user will only have those 317 documents on their device. This can break workflows that depend on existing data (e.g. pregnancy follow-up tasks may not be triggered if the original pregnancy document is purged from the device).

A good approach when setting up your purge configuration is to build a matrix of user roles against the types of data records created by your various forms. In each cell, fill in how long that role needs access to those records to perform its workflows. For example, a pregnancy record might need to remain on a client device for 9+ months, a patient assessment form might be safe to purge after 1 month, and vaccination records might never be purged. These time periods depend entirely on your particular config and the needs of your users, so we do not have a “recommended” purge configuration. You can then reference this matrix when writing your actual purge configuration.
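To make the matrix idea concrete, here is a minimal TypeScript sketch of how such a matrix could drive the decision about which reports to purge. Everything in it is an assumption for illustration: the role names, form names, and retention periods are placeholders, and `RETENTION_DAYS`, `shouldPurge`, and `reportsToPurge` are hypothetical helpers, not part of the CHT. The sketch deliberately keeps any report that has no matching rule, so a gap in the matrix never causes a purge.

```typescript
// Hypothetical retention matrix: role -> form -> days to keep on the device.
// null means "never purge". All names and durations below are placeholders.
type RetentionMatrix = Record<string, Record<string, number | null>>;

const RETENTION_DAYS: RetentionMatrix = {
  chw: { pregnancy: 300, assessment: 30, vaccination: null },
  chw_supervisor: { pregnancy: 300, assessment: 90, vaccination: null },
};

// Minimal shapes assumed for this sketch; real documents carry more fields.
interface Report { _id: string; form: string; reported_date: number; }
interface UserCtx { roles: string[]; }

const DAY_MS = 24 * 60 * 60 * 1000;

// A report is purged only if EVERY role of the user has an explicit,
// numeric retention period for its form and the longest one has elapsed.
function shouldPurge(roles: string[], report: Report, now: number): boolean {
  if (roles.length === 0) {
    return false;
  }
  let maxDays = 0;
  for (const role of roles) {
    const days: number | null | undefined = RETENTION_DAYS[role]?.[report.form];
    if (days === undefined || days === null) {
      return false; // no rule, or "never purge": keep the report
    }
    maxDays = Math.max(maxDays, days);
  }
  return now - report.reported_date > maxDays * DAY_MS;
}

// Returns the ids of the reports that should be purged for this user.
function reportsToPurge(userCtx: UserCtx, reports: Report[], now: number = Date.now()): string[] {
  return reports
    .filter((report) => shouldPurge(userCtx.roles, report, now))
    .map((report) => report._id);
}
```

In an actual CHT config the purge rules are written in JavaScript, so logic like this would need to be adapted to the purge function format described on the docs page; check there for the exact function signature and constraints before relying on this sketch.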