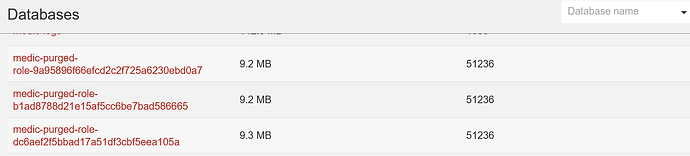

@yuv, @jkuester, I checked Fauxton today to see the progress of the purging status, as below:

For some users (mainly CHWs), the app doesn't load and instead displays the message "Polling replication data". We had set the replication depth for them to 3 (CHW Area, Households, and Household Members).

My assumption was that once purging is done, the number of documents to poll would be reduced, but the challenge still exists. Any ideas where else to check? The users are frustrated, and so am I. I tried logging in from my laptop as one of the users and hit the same challenge; I had to forget the site to stop the polling and replication screen.