Hey @diana,

I wanted to check whether you saw the logs I posted in my last comment. They include crash reports from CouchDB showing errors in the medic-sentinel shards, with the following messages:

[error] 2023-09-17T07:21:07.986973Z couchdb@127.0.0.1 <0.20981.663> -------- rexi_server: from: couchdb@127.0.0.1(<0.9577.664>) mfa: fabric_rpc:all_docs/3 exit:timeout [{rexi,init_stream,1,[{file,"src/rexi.erl"},{line,265}]},{rexi,stream2,3,[{file,"src/rexi.erl"},{line,205}]},{fabric_rpc,view_cb,2,[{file,"src/fabric_rpc.erl"},{line,462}]},{couch_mrview,finish_fold,2,[{file,"src/couch_mrview.erl"},{line,682}]},{rexi_server,init_p,3,[{file,"src/rexi_server.erl"},{line,140}]}]

[error] 2023-09-17T07:21:07.988479Z couchdb@127.0.0.1 <0.32146.663> -------- rexi_server: from: couchdb@127.0.0.1(<0.9577.664>) mfa: fabric_rpc:all_docs/3 exit:timeout [{rexi,init_stream,1,[{file,"src/rexi.erl"},{line,265}]},{rexi,stream2,3,[{file,"src/rexi.erl"},{line,205}]},{fabric_rpc,view_cb,2,[{file,"src/fabric_rpc.erl"},{line,462}]},{couch_mrview,finish_fold,2,[{file,"src/couch_mrview.erl"},{line,682}]},{rexi_server,init_p,3,[{file,"src/rexi_server.erl"},{line,140}]}]

[error] 2023-09-17T07:21:07.988633Z couchdb@127.0.0.1 <0.18349.664> -------- rexi_server: from: couchdb@127.0.0.1(<0.9577.664>) mfa: fabric_rpc:all_docs/3 exit:timeout [{rexi,init_stream,1,[{file,"src/rexi.erl"},{line,265}]},{rexi,stream2,3,[{file,"src/rexi.erl"},{line,205}]},{fabric_rpc,view_cb,2,[{file,"src/fabric_rpc.erl"},{line,462}]},{couch_mrview,finish_fold,2,[{file,"src/couch_mrview.erl"},{line,682}]},{rexi_server,init_p,3,[{file,"src/rexi_server.erl"},{line,140}]}]

[error] 2023-09-17T07:21:07.990486Z couchdb@127.0.0.1 <0.28804.663> -------- rexi_server: from: couchdb@127.0.0.1(<0.9577.664>) mfa: fabric_rpc:all_docs/3 exit:timeout [{rexi,init_stream,1,[{file,"src/rexi.erl"},{line,265}]},{rexi,stream2,3,[{file,"src/rexi.erl"},{line,205}]},{fabric_rpc,view_cb,2,[{file,"src/fabric_rpc.erl"},{line,462}]},{couch_mrview,finish_fold,2,[{file,"src/couch_mrview.erl"},{line,682}]},{rexi_server,init_p,3,[{file,"src/rexi_server.erl"},{line,140}]}]

[error] 2023-09-17T07:24:34.801860Z couchdb@127.0.0.1 <0.28082.664> -------- CRASH REPORT Process (<0.28082.664>) with 2 neighbors crashed with reason: no case clause matching {mrheader,727,0,{223139,[],223572},nil,[{{233022,{114,[],6290},10342},nil,nil,687,0},{{367574,{2064,[],251444},133857},nil,nil,704,0},{nil,nil,nil,0,0},{{367822,{2,[],68},160},nil,nil,312,0},{{385322,{186,[],15187},17707},nil,nil,704,0},{{395335,{186,[{0,186,0,0,0}],9585},9828},nil,nil,704,0},{nil,nil,nil,0,0},{{401164,{114,[],2750},6066},nil,nil,687,0},{{408428,{114,[true],6752},7063},nil,nil,687,0},{{414693,{114,[],2978},6512},nil,nil,687,0},{{420837,{114,[],2752},6124},nil,nil,727,0},{{433374,...},...},...]} at couch_bt_engine_header:downgrade_partition_header/1(line:225) <= lists:foldl/3(line:1263) <= couch_bt_engine:init_state/4(line:790) <= couch_bt_engine:init/2(line:159) <= couch_db_engine:init/3(line:722) <= couch_db_updater:init/1(line:32) <= proc_lib:init_p_do_apply/3(line:247); initial_call: {couch_db_updater,init,['Argument__1']}, ancestors: [<0.23656.664>], message_queue_len: 0, messages: [], links: [<0.23656.664>,<0.23623.664>], dictionary: [{io_priority,{db_update,<<"shards/95555553-aaaaaaa7/medic-sentinel.1...">>}},...], trap_exit: false, status: running, heap_size: 1598, stack_size: 27, reductions: 817

[error] 2023-09-17T07:24:34.802836Z couchdb@127.0.0.1 <0.21583.664> -------- CRASH REPORT Process (<0.21583.664>) with 2 neighbors crashed with reason: no case clause matching {mrheader,821,0,{484276,[],92028},nil,[{{522637,{291,[],182204},120843},nil,nil,821,0},{nil,nil,nil,0,0},{nil,nil,nil,0,0},{{487489,{300,[],40298},29129},nil,nil,816,0},{nil,nil,nil,0,0},{nil,nil,nil,0,0},{{248289,{103,[{174308209776416,103,1691914099589,1693148200881,294984006818723137501912846}],11388},8951},nil,nil,791,0},{nil,nil,nil,0,0},{{501916,{2778,[],172744},153415},nil,nil,816,0}]} at couch_bt_engine_header:downgrade_partition_header/1(line:225) <= lists:foldl/3(line:1263) <= couch_bt_engine:init_state/4(line:790) <= couch_bt_engine:init/2(line:159) <= couch_db_engine:init/3(line:722) <= couch_db_updater:init/1(line:32) <= proc_lib:init_p_do_apply/3(line:247); initial_call: {couch_db_updater,init,['Argument__1']}, ancestors: [<0.12235.664>], message_queue_len: 0, messages: [], links: [<0.12235.664>,<0.11643.664>], dictionary: [{io_priority,{db_update,<<"shards/3fffffff-55555553/medic-sentinel.1...">>}},...], trap_exit: false, status: running, heap_size: 987, stack_size: 27, reductions: 809

[error] 2023-09-17T07:28:07.369064Z couchdb@127.0.0.1 <0.3462.665> -------- CRASH REPORT Process (<0.3462.665>) with 2 neighbors crashed with reason: no case clause matching {mrheader,821,0,{484276,[],92028},nil,[{{522637,{291,[],182204},120843},nil,nil,821,0},{nil,nil,nil,0,0},{nil,nil,nil,0,0},{{487489,{300,[],40298},29129},nil,nil,816,0},{nil,nil,nil,0,0},{nil,nil,nil,0,0},{{248289,{103,[{174308209776416,103,1691914099589,1693148200881,294984006818723137501912846}],11388},8951},nil,nil,791,0},{nil,nil,nil,0,0},{{501916,{2778,[],172744},153415},nil,nil,816,0}]} at couch_bt_engine_header:downgrade_partition_header/1(line:225) <= lists:foldl/3(line:1263) <= couch_bt_engine:init_state/4(line:790) <= couch_bt_engine:init/2(line:159) <= couch_db_engine:init/3(line:722) <= couch_db_updater:init/1(line:32) <= proc_lib:init_p_do_apply/3(line:247); initial_call: {couch_db_updater,init,['Argument__1']}, ancestors: [<0.14500.664>], message_queue_len: 0, messages: [], links: [<0.14500.664>,<0.12117.664>], dictionary: [{io_priority,{db_update,<<"shards/3fffffff-55555553/medic-sentinel.1...">>}},...], trap_exit: false, status: running, heap_size: 987, stack_size: 27, reductions: 809

[error] 2023-09-17T07:28:07.376409Z couchdb@127.0.0.1 <0.2324.665> -------- CRASH REPORT Process (<0.2324.665>) with 2 neighbors crashed with reason: no case clause matching {mrheader,727,0,{223139,[],223572},nil,[{{233022,{114,[],6290},10342},nil,nil,687,0},{{367574,{2064,[],251444},133857},nil,nil,704,0},{nil,nil,nil,0,0},{{367822,{2,[],68},160},nil,nil,312,0},{{385322,{186,[],15187},17707},nil,nil,704,0},{{395335,{186,[{0,186,0,0,0}],9585},9828},nil,nil,704,0},{nil,nil,nil,0,0},{{401164,{114,[],2750},6066},nil,nil,687,0},{{408428,{114,[true],6752},7063},nil,nil,687,0},{{414693,{114,[],2978},6512},nil,nil,687,0},{{420837,{114,[],2752},6124},nil,nil,727,0},{{433374,...},...},...]} at couch_bt_engine_header:downgrade_partition_header/1(line:225) <= lists:foldl/3(line:1263) <= couch_bt_engine:init_state/4(line:790) <= couch_bt_engine:init/2(line:159) <= couch_db_engine:init/3(line:722) <= couch_db_updater:init/1(line:32) <= proc_lib:init_p_do_apply/3(line:247); initial_call: {couch_db_updater,init,['Argument__1']}, ancestors: [<0.10794.664>], message_queue_len: 0, messages: [], links: [<0.10794.664>,<0.21046.664>], dictionary: [{io_priority,{db_update,<<"shards/95555553-aaaaaaa7/medic-sentinel.1...">>}},...], trap_exit: false, status: running, heap_size: 1598, stack_size: 27, reductions: 817

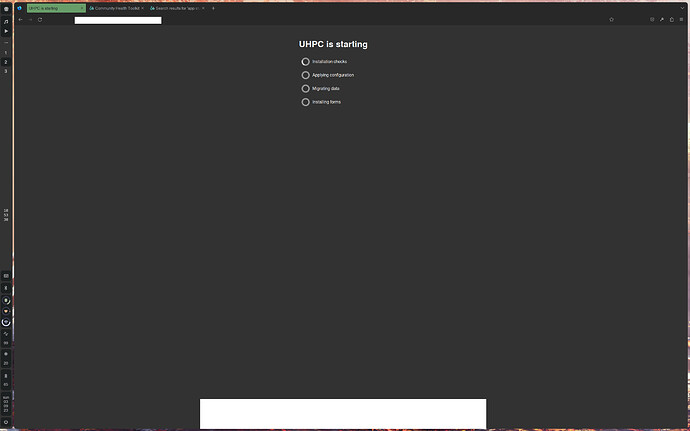
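The log dump above is long and repetitive, so here is a quick sketch that tallies the `[error]` lines by kind and by shard. It assumes every entry follows the `[level] timestamp node <pid> -------- message` layout shown above. The function names (`classify`, `summarize`) and the two category labels are hypothetical helpers I made up for this summary, not part of CouchDB or CHT. The classification is just a substring heuristic based on the messages in these particular logs.

```python
import re
from collections import Counter

# CouchDB log prefix as it appears above: [level] timestamp node <pid> -------- message
LOG_RE = re.compile(
    r"^\[(?P<level>\w+)\] (?P<ts>\S+) (?P<node>\S+) (?P<pid><[\d.]+>) -+ (?P<msg>.*)$"
)
# Shard paths look like shards/<range-start>-<range-end>/<dbname>.<suffix>
SHARD_RE = re.compile(r"shards/(?P<range>[0-9a-f]+-[0-9a-f]+)/(?P<db>[\w-]+)")


def classify(msg: str) -> str:
    """Hypothetical heuristic: bucket a message by the two error kinds seen in these logs."""
    if "CRASH REPORT" in msg and "downgrade_partition_header" in msg:
        return "header_crash"
    if "rexi_server" in msg and "exit:timeout" in msg:
        return "rexi_timeout"
    return "other"


def summarize(lines):
    """Count [error] entries keyed by (kind, shard range, db name)."""
    counts = Counter()
    for line in lines:
        m = LOG_RE.match(line.strip())
        if not m or m.group("level") != "error":
            continue  # skip non-error levels and lines that don't match the prefix
        msg = m.group("msg")
        shard = SHARD_RE.search(msg)
        key = (
            classify(msg),
            shard.group("range") if shard else None,  # rexi timeouts carry no shard path
            shard.group("db") if shard else None,
        )
        counts[key] += 1
    return counts
```

To run it against a saved log (the path is only an example), use `with open("couchdb.log") as f: print(summarize(f))`. For the excerpt above, this should show two crashes each for the `95555553-aaaaaaa7` and `3fffffff-55555553` medic-sentinel shards, plus four rexi timeouts with no shard attached.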

Please let me know if this helps diagnose the issue. All other containers appear to be running without problems.

cc: @sanjay @yuv