I am hosting an application for the first time, following this tutorial:

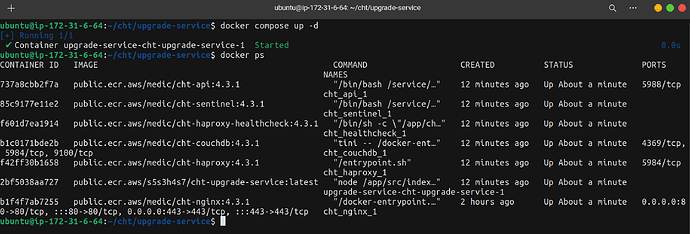

However, I noticed that the cht-net network is missing, even though everything else seems to be set up. I am using an Amazon EC2 instance. Is there anything I am missing?

Hi @kembo

What URL are you getting this error on?

To get some clarity, it would be super helpful to have logs from your containers. There is a handy script that will help you collect these logs: cht-core/scripts/compress_and_archive_docker_logs.sh in the medic/cht-core repository on GitHub (master branch).

I get the error when I open my production IP address. If I generate the production log files, I am not sure how to share them. Can I share screenshots of the output of `docker logs <container name> -n 50` instead?
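If screenshots are the only option, the `docker logs <container name> -n 50` command from the question can be looped over every container so that no container is missed. This is a minimal bash sketch, not the cht-core archive script; the function name `dump_recent_logs` and the default output directory `./container-logs` are hypothetical, and it assumes the docker CLI is usable by the current user.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of cht-core): save the last N log lines of
# every container, running or stopped, to <outdir>/<container>.log.
# Assumes the docker CLI is on PATH and usable by the current user.
dump_recent_logs() {
  local lines="${1:-50}"
  local outdir="${2:-./container-logs}"
  local name
  mkdir -p "$outdir"
  # -a includes stopped containers; --format prints one name per line.
  for name in $(docker ps -a --format '{{.Names}}'); do
    # docker logs replays both stdout and stderr, so capture both.
    docker logs --tail "$lines" "$name" > "$outdir/$name.log" 2>&1
  done
}
```

For example, `dump_recent_logs 50 ./cht-logs` followed by `tar czf cht-logs.tar.gz cht-logs` gives a single file that can be uploaded instead of screenshots.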

I also noticed that in @mrjones's video tutorial (at 3:46) both cht-net and upgrade-service are created, whereas mine only has upgrade-service. Is that significant in any way?

@kembo,

Thanks for the update!

> I am not sure if there is a way to share the production logs

Here is detailed documentation on how to run the compress_and_archive_docker_logs.sh script. If you can run docker commands on the server, you can run the script. This will certainly be the easiest way for us to see all the details.

> I also noticed that unlike @mrjones video tutorial (see 3:46) that has cht-net and upgrade-service created, mine only has the upgrade-service one. Is that significant in any way?

cht-net is only created once, so it most likely already existed from one of your previous attempts; this should be fine. To double-check, you can run `docker network ls --filter "name=cht"`.
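That check can also be scripted when re-running a setup. Because the `name` filter of `docker network ls` matches any network whose name merely contains `cht`, this minimal sketch compares names exactly. The function name `cht_net_exists` is hypothetical, and the network name `cht-net` is taken from this thread.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed (exit status 0) only if a Docker network
# named exactly "cht-net" exists. Assumes the docker CLI is on PATH.
cht_net_exists() {
  # The name filter is a partial match, so pin the exact name with grep -x.
  docker network ls --filter "name=cht" --format '{{.Name}}' | grep -qx 'cht-net'
}
```

Usage: `if cht_net_exists; then echo "cht-net is present"; else echo "cht-net not found"; fi`.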

Hello @mrjones and @diana, here are the logs: cht-docker-logs-2024-01-11T07.10.23+00.00.tar.gz (Google Drive).

Thanks for the documentation on sharing logs.

Hi @kembo

Looking at the logs, it seems that CouchDB authentication is failing:

[warning] 2024-01-11T07:05:25.832125Z couchdb@127.0.0.1 <0.481.0> 7dc020750a couch_httpd_auth: Authentication failed for user medic from 172.19.0.3

I’m not exactly sure what’s wrong here, but I have one suspicion.

Looking at your cht-credentials mount:

- cht-credentials:/opt/couchdb/etc/local.d:rw

I wonder whether this path is actually writable. Can you try resetting the containers and selecting a different cht-credentials mount path?

I am not sure how to select a different cht-credentials mount path, as you suggested.

I have also tried restarting the whole process several times, including removing all containers and the network and starting afresh.

@diana do you have any more ideas I could try out?

Can you try not mounting cht-credentials to a specific location on disk and see what happens? This will probably require recreating the containers, including the CouchDB data volumes.

@kembo

Did you use the default Docker Compose files from Medic for this deployment?

Can you please share the docker-compose files that you’ve used?

Thanks

I used the ones provided in the CHT docs.

Hi @kembo

Thanks for answering. It seems like you indeed haven’t made any changes to the docker-compose files.

Did you ever restart the process and, while doing so, change the CouchDB password?

Hi @diana, no, I haven't restarted the process with a changed password. The closest I have come is killing and removing all containers, deleting the directory, and starting from scratch. This always gives the same error.

Hi @kembo

What command do you run to remove all containers?

Hi @diana

I ran `docker kill $(docker ps -a -q)` and then `docker rm $(docker ps -a -q)`.

I also deleted all files with `rm -rf cht/` and removed the network with `docker network rm cht-net`.

I have done this several times trying to start over, but the error persists.

Hi @kembo.

Can you please run `docker volume ls` and check whether you have volumes named like `***_cht_credentials`?

That is where CouchDB persists passwords, and accidentally reusing a volume from a previous attempt could produce exactly this error. Can you please delete all volumes that look like that and try starting the containers again?
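Finding those volumes can be scripted. This minimal sketch assumes the credentials volume names end in `cht-credentials` or `cht_credentials` (Docker Compose normally prefixes named volumes with the project name, and this thread shows both spellings); the function name `list_cht_credential_volumes` is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print Docker volumes whose names end in
# "cht-credentials" or "cht_credentials" (naming assumed from this thread).
# Assumes the docker CLI is on PATH.
list_cht_credential_volumes() {
  # grep exits 1 when nothing matches; treat "no volumes" as success.
  docker volume ls --format '{{.Name}}' | grep -E 'cht[-_]credentials$' || true
}
```

Docker refuses to remove a volume that is still referenced by any container, even a stopped one, so remove the containers first. The listed volumes can then be deleted, for example with `list_cht_credential_volumes | xargs -r docker volume rm` (`-r` is a GNU xargs option that skips the call when the list is empty).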

A combination of this and deleting all other containers worked. Here are some debugging notes for anyone who faces this issue:

- Before running the CHT Docker containers, make sure your EC2 instance is prepared by first testing bare-metal access through nginx, so that you can isolate the issue. This tutorial might help with that.

- While deleting folders and containers, don't forget to delete the network and the credentials volumes. List networks with `docker network ls` and volumes with `docker volume ls`, then delete volumes with `docker volume rm vol-name` and networks with `docker network rm net-name`.

- Primarily, this issue is most likely caused by a CouchDB authentication problem.
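The cleanup steps in these notes can be combined into one script. This is a minimal sketch under the thread's assumptions (a network named `cht-net`, credentials volumes ending in `cht-credentials` or `cht_credentials`); the function name `reset_cht_docker` is hypothetical. Like the commands used in this thread, it removes every container on the host, so only run it on a machine dedicated to CHT. It leaves the CouchDB data volumes alone.

```shell
#!/usr/bin/env bash
# Hypothetical reset helper based on the debugging notes in this thread.
# WARNING: removes ALL containers on this host, the "cht-net" network and
# any volume whose name ends in cht-credentials/cht_credentials.
# CouchDB data volumes are not touched. Assumes the docker CLI is on PATH.
reset_cht_docker() {
  local ids vol
  ids=$(docker ps -aq)
  if [ -n "$ids" ]; then
    # rm -f stops running containers and removes stopped ones in one step,
    # so a separate "docker kill" is not needed. $ids is left unquoted on
    # purpose so that each container ID becomes its own argument.
    docker rm -f $ids
  fi
  # Remove the network only if a network with exactly this name exists.
  if docker network ls --format '{{.Name}}' | grep -qx 'cht-net'; then
    docker network rm cht-net
  fi
  # Volumes can only be removed once no container references them,
  # which is why this runs after the containers are gone.
  for vol in $(docker volume ls --format '{{.Name}}' | grep -E 'cht[-_]credentials$'); do
    docker volume rm "$vol"
  done
}
```

After `reset_cht_docker` finishes, the containers can be started again from the Docker Compose files, and CouchDB will get a fresh credentials volume instead of reusing a stale one.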